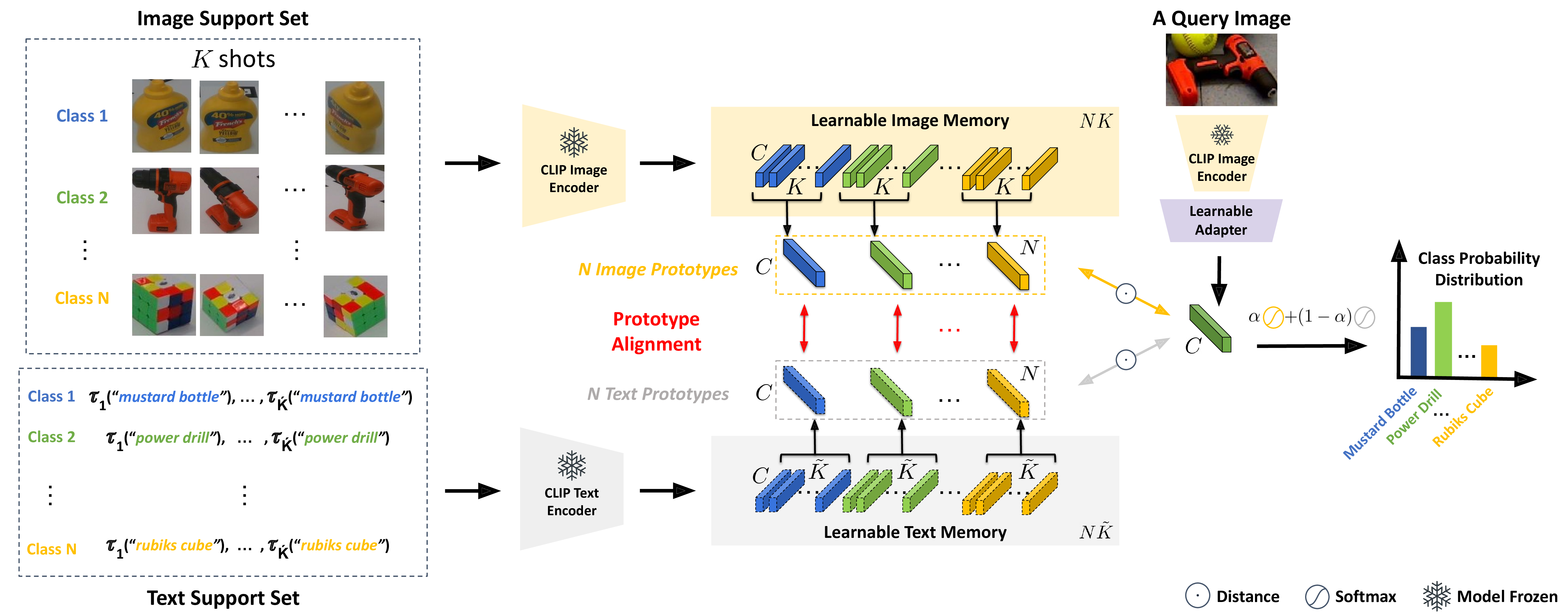

The CLIP image encoder and text encoder are frozen during training; the image memory, the text memory, and the adapter network are learned. Given a class name, τ_i returns the i-th of K predefined text prompts.
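As a rough illustration of the prompt function τ_i described in the caption, the sketch below builds the K prompts for a class name and combines their embeddings into a single text prototype. The template strings, the `text_prototype` helper, the choice to average and renormalize the prompt embeddings, and the `toy_encoder` stand-in are all assumptions made for illustration. They are not taken from the Proto-CLIP code, and a real setup would pass in the frozen CLIP text encoder instead.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical prompt templates; the actual K templates are not listed on this page.
TEMPLATES: List[str] = [
    "a photo of a {}.",
    "a close-up photo of a {}.",
    "a cropped photo of a {}.",
]


def tau(i: int, class_name: str) -> str:
    """Return the i-th (0-based) of the K predefined prompts for class_name."""
    return TEMPLATES[i].format(class_name)


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def text_prototype(
    class_name: str,
    encode_text: Callable[[str], Sequence[float]],
) -> List[float]:
    """Assumed recipe: average the normalized embeddings of all K prompts,
    then renormalize the mean."""
    embs = [l2_normalize(encode_text(tau(i, class_name)))
            for i in range(len(TEMPLATES))]
    dim = len(embs[0])
    mean = [sum(e[d] for e in embs) / len(embs) for d in range(dim)]
    return l2_normalize(mean)


def toy_encoder(text: str) -> List[float]:
    """Stand-in for the frozen CLIP text encoder; NOT a real embedding."""
    return [float(len(text)), float(text.count("a")), 1.0]


if __name__ == "__main__":
    print(tau(0, "mug"))
    print(text_prototype("mug", toy_encoder))
```

Passing the encoder as a callable keeps the sketch independent of any particular CLIP library and mirrors the fact that the encoder itself is frozen: only what is built on top of its outputs (the memories and the adapter) would be trained.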

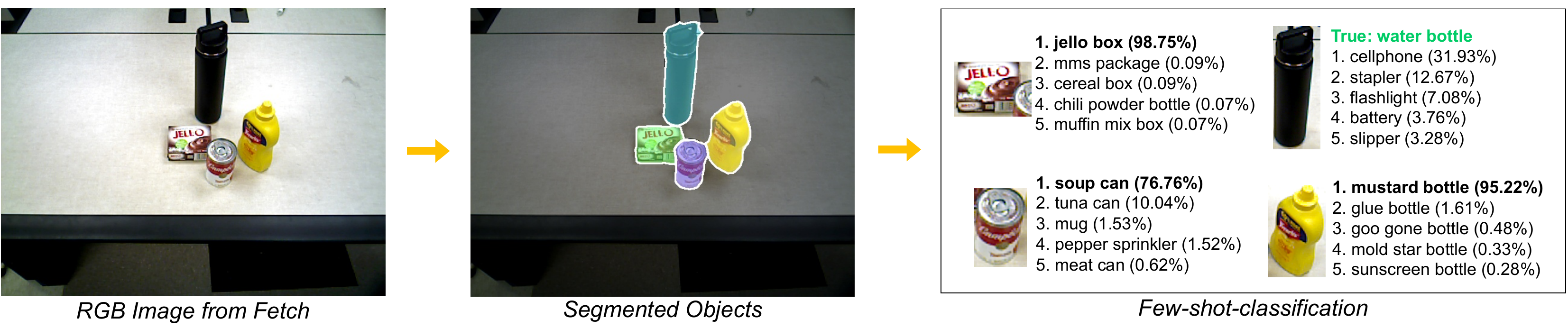

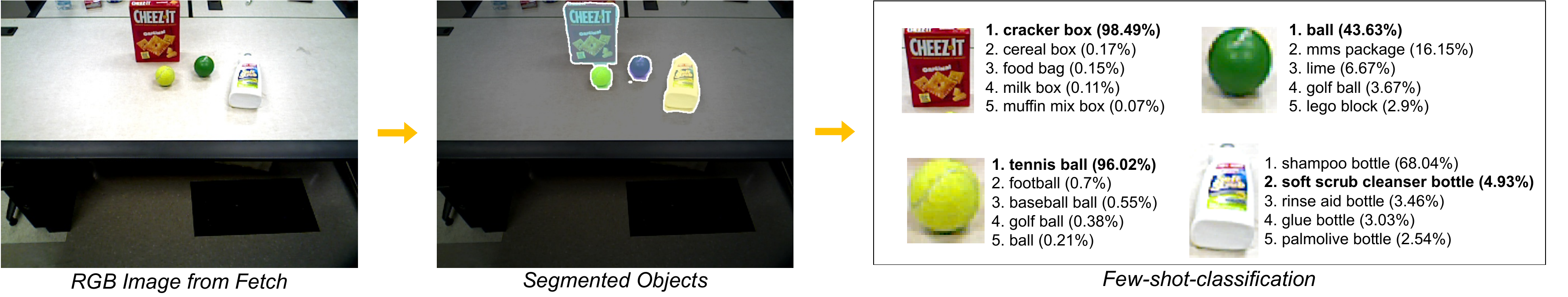

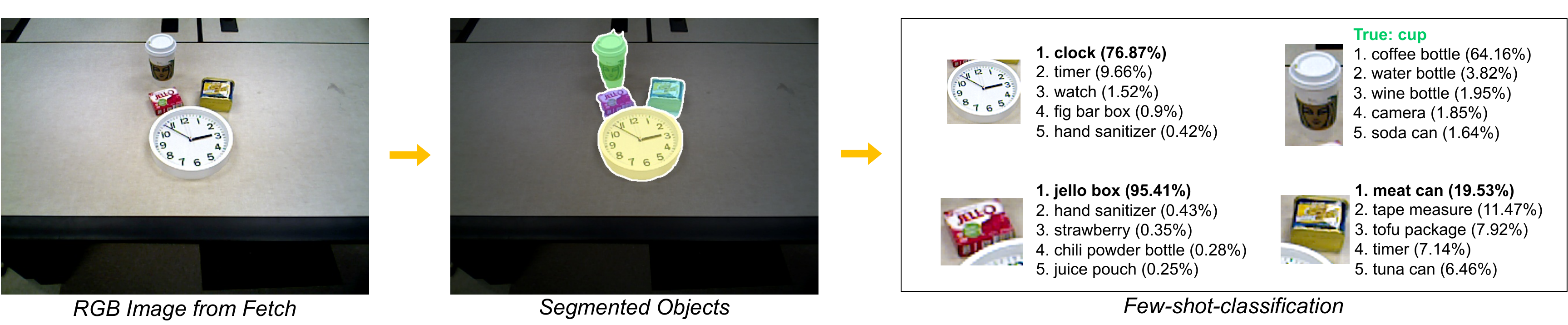

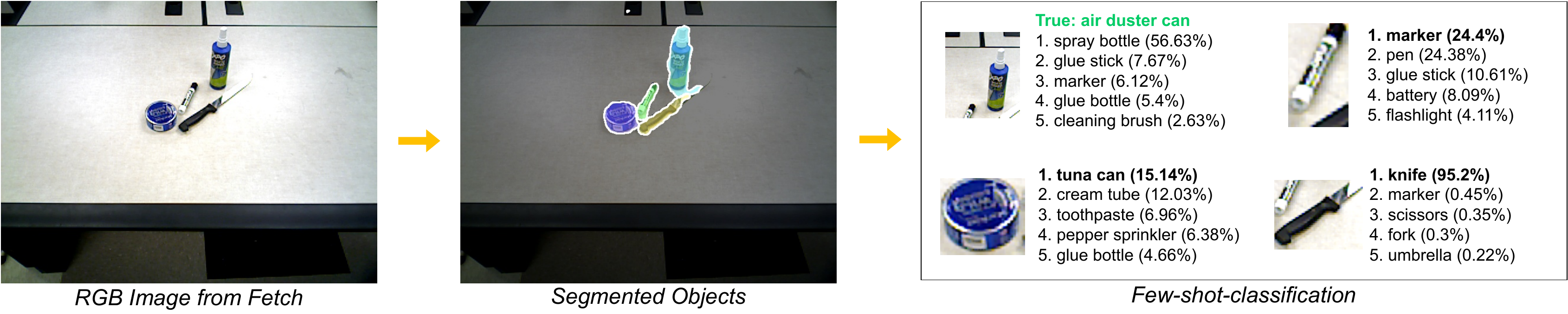

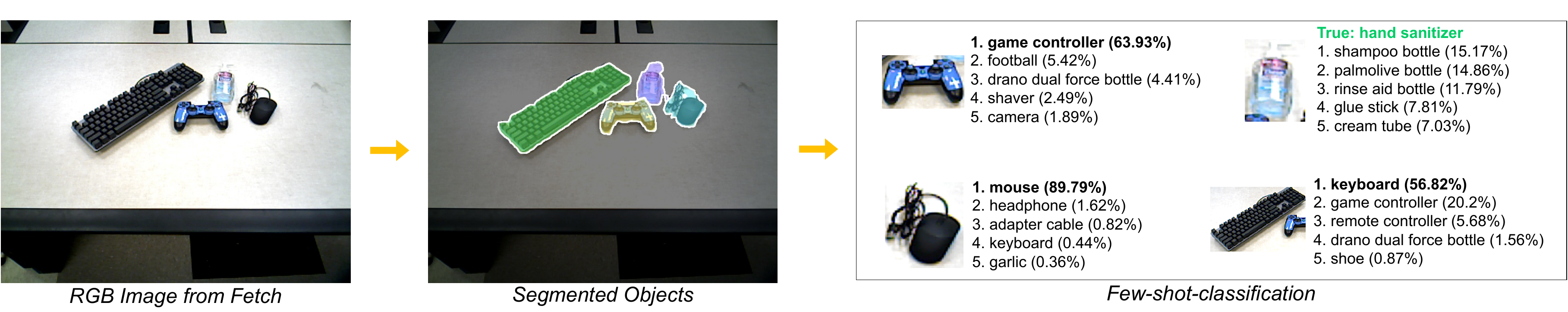

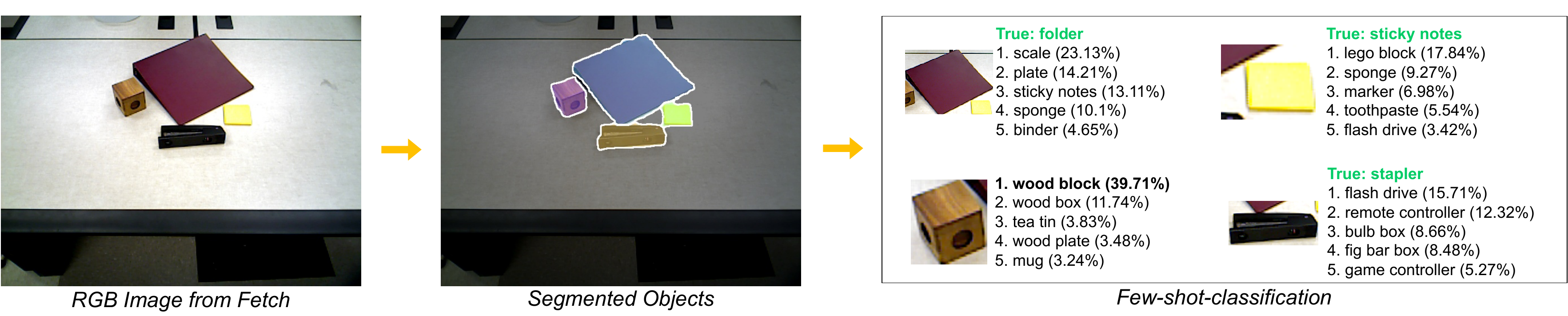

User-command-oriented robot grasping using Proto-CLIP predictions in the real world (4 scenes).

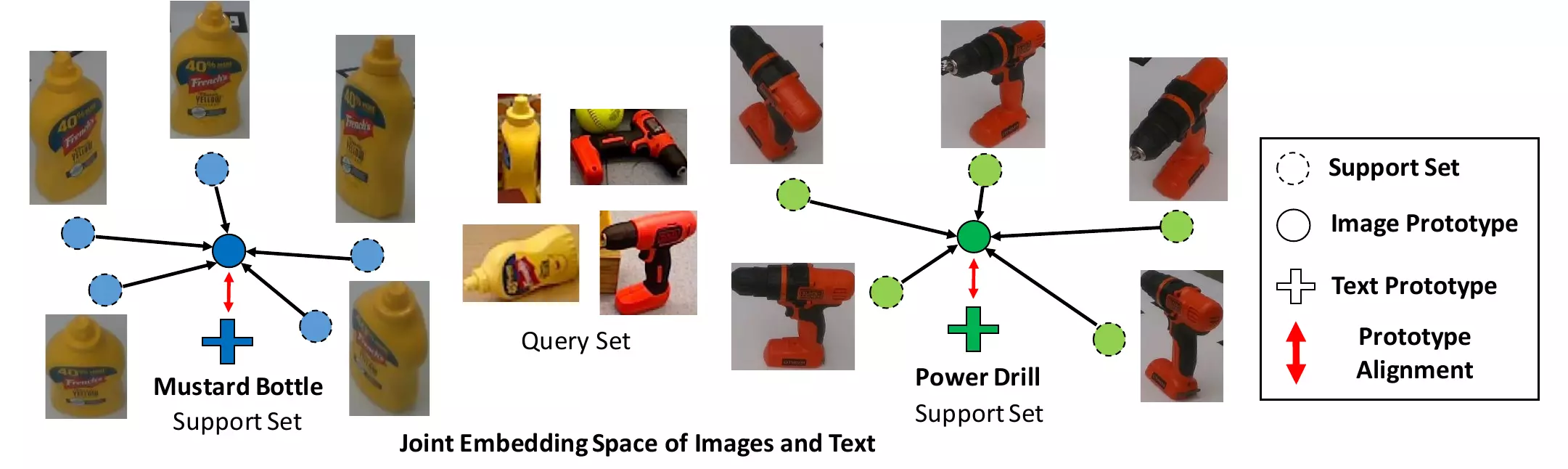

(a) Image and text prototypes from zero-shot CLIP, which are not aligned

(b) Aligned image and text prototypes from fine-tuned Proto-CLIP

Barnes-Hut t-SNE visualization using fine-tuned Proto-CLIP trained on the FewSOL dataset (198 classes). Here, image and text prototypes are aligned closer to each other. Objects with similar shapes are closer. Semantics are captured as well, e.g., vegetables and fruits are closer to each other. Zoom in to take a closer look.

@article{padalunkal2023protoclip,
  title={Proto-CLIP: Vision-Language Prototypical Network for Few-Shot Learning},
  author={Jishnu Jaykumar P and Kamalesh Palanisamy and Yu-Wei Chao and Xinya Du and Yu Xiang},
  archivePrefix={arXiv},
  eprint={2307.03073},
  year={2023}
}

This work was supported in part by the DARPA Perceptually-enabled Task Guidance (PTG) Program under contract number HR00112220005.