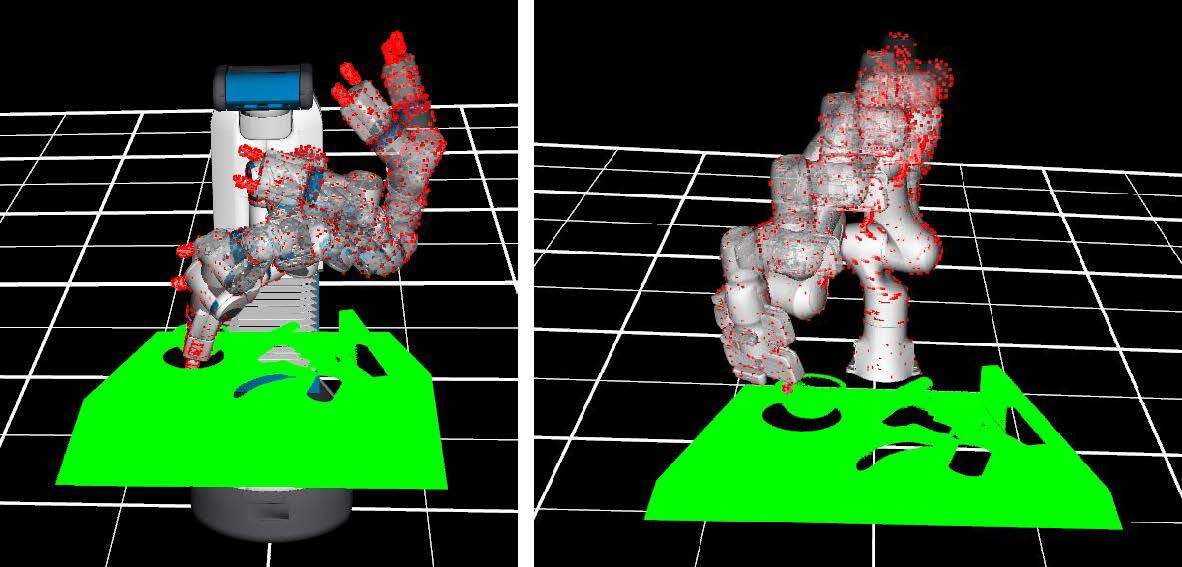

We introduce a new trajectory optimization method for robotic grasping based on a point-cloud representation of robots and task spaces. In our method, a robot is represented by 3D points sampled on its link surfaces, and the robot's task space is represented by a point cloud that can be obtained from depth sensors. With this representation, goal reaching in grasping can be formulated as point matching, while collision avoidance can be achieved efficiently by querying the signed distance values of the robot points in a signed distance field computed from the scene points. Combining these terms, we formulate a constrained nonlinear optimization problem that solves the joint motion and grasp planning problem. The advantage of our method is that the point-cloud representation is general enough to be used with any robot in any environment. We demonstrate the effectiveness of our method through grasping experiments on a tabletop scene and a shelf scene with a Fetch mobile manipulator and a Franka Panda arm.
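The two queries at the heart of this formulation, point matching for goal reaching and signed-distance lookups for collision avoidance, can be sketched in a few lines of Python. This is a minimal sketch under our own assumptions, not the authors' implementation: the scene SDF is approximated by voxelizing the scene points and running a Euclidean distance transform (voxels containing points count as "inside"), robot points are assumed to be already transformed by forward kinematics, and the names `build_sdf`, `query_sdf`, `goal_matching_cost`, and `collision_cost`, the 2 cm margin, and the toy tabletop are all hypothetical.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt, map_coordinates


def build_sdf(scene_points, origin, voxel_size, shape):
    """Approximate a signed distance field (meters) from scene points.

    Assumption: a voxel that contains at least one scene point is "inside"
    (occupied); every other voxel is "outside" (free).
    """
    occupied = np.zeros(shape, dtype=bool)
    idx = np.floor((scene_points - origin) / voxel_size).astype(int)
    in_bounds = np.all((idx >= 0) & (idx < np.array(shape)), axis=1)
    idx = idx[in_bounds]
    occupied[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    # Free voxels: distance to the nearest occupied voxel (0 for occupied ones).
    outside = distance_transform_edt(~occupied) * voxel_size
    # Occupied voxels: distance to the nearest free voxel (0 for free ones).
    inside = distance_transform_edt(occupied) * voxel_size
    return outside - inside


def query_sdf(sdf, points, origin, voxel_size):
    """Trilinearly interpolate the SDF at (N, 3) robot points."""
    # Metric coordinates -> continuous indices (index i is the center of voxel i).
    coords = (points - origin) / voxel_size - 0.5
    return map_coordinates(sdf, coords.T, order=1, mode="nearest")


def goal_matching_cost(robot_points, goal_points):
    """Goal reaching as point matching: mean squared distance between
    corresponding gripper points and goal (grasp) points."""
    return np.mean(np.sum((robot_points - goal_points) ** 2, axis=1))


def collision_cost(sdf_values, margin=0.02):
    """Squared hinge penalty for robot points closer than `margin` to the scene."""
    return np.sum(np.maximum(0.0, margin - sdf_values) ** 2)


# Toy example (hypothetical): a 1 m x 1 m x 0.8 m workspace with 2 cm voxels.
origin = np.array([-0.5, -0.5, -0.2])
voxel_size = 0.02
shape = (50, 50, 40)

# Hypothetical tabletop: one flat layer of points at z = 0.01 m.
xs = np.linspace(-0.4, 0.4, 81)
gx, gy = np.meshgrid(xs, xs)
table = np.stack([gx.ravel(), gy.ravel(), np.full(gx.size, 0.01)], axis=1)
sdf = build_sdf(table, origin, voxel_size, shape)

# Two hypothetical gripper points: the goal pose sits 0.2 m above the table,
# while the current pose has been pushed down into the table.
goal = np.array([[0.0, 0.0, 0.21], [0.0, 0.05, 0.21]])
current = goal + np.array([0.0, 0.0, -0.2])
d_cur = query_sdf(sdf, current, origin, voxel_size)

print("matching cost:", goal_matching_cost(current, goal))  # 0.2 m offset per point
print("collision cost:", collision_cost(d_cur))              # both points are inside
```

For simplicity the collision term here is a squared hinge penalty. In a constrained formulation like the one described above, one would instead impose `query_sdf(...) >= margin` on every robot point at every trajectory waypoint as inequality constraints and let the solver enforce them.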

1. SceneReplica: Benchmarking Real-World Robot Manipulation by Creating Reproducible Scenes

Ninad Khargonkar, Sai Haneesh Allu, Yangxiao Lu, Jishnu Jaykumar P, Balakrishnan Prabhakaran, Yu Xiang

In International Conference on Robotics and Automation (ICRA), 2024.

@misc{xiang2024grasping,
  title={Grasping Trajectory Optimization with Point Clouds},
  author={Yu Xiang and Sai Haneesh Allu and Rohith Peddi and Tyler Summers and Vibhav Gogate},
  year={2024},
  eprint={2403.05466},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}

Send any comments or questions to Yu Xiang: yu.xiang@utdallas.edu

This work was supported in part by the DARPA Perceptually-enabled Task Guidance (PTG) Program under contract number HR00112220005 and the Sony Research Award Program.